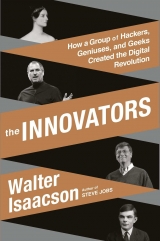

The Innovators: How a Group of Inventors, Hackers, Geniuses, and Geeks Created the Digital Revolution

Author: Walter Isaacson

Genre: Biographies and memoirs


John von Neumann (1903–57) in 1954.

Herman Goldstine (1913–2004) circa 1944.

Presper Eckert (center) and CBS’s Walter Cronkite (right) look at an election prediction from UNIVAC in 1952.

Most of us have been involved in group brainstorming sessions that produced creative ideas. Even a few days later, there may be different recollections of who suggested what first, and we realize that the formation of ideas was shaped more by the iterative interplay within the group than by an individual tossing in a wholly original concept. The sparks come from ideas rubbing against each other rather than as bolts out of the blue. This was true at Bell Labs, Los Alamos, Bletchley Park, and Penn. One of von Neumann’s great strengths was his talent—questioning, listening, gently floating tentative proposals, articulating, and collating—for being an impresario of such a collaborative creative process.

Von Neumann’s propensity to collect and collate ideas, and his lack of concern for pinning down precisely where they came from, was useful in sowing and fertilizing the concepts that became part of EDVAC. But it did sometimes rankle those more concerned about getting credit—or even intellectual property rights—where due. He once proclaimed that it was not possible to attribute the origination of ideas discussed in a group. Upon hearing that, Eckert is said to have responded, “Really?”58

The benefits and drawbacks of von Neumann’s approach became apparent in June 1945. After ten months of buzzing around the work being done at Penn, he offered to summarize their discussions on paper. And that is what he proceeded to do on a long train ride to Los Alamos.

In his handwritten report, which he mailed back to Goldstine at Penn, von Neumann described in mathematically dense detail the structure and logical control of the proposed stored-program computer and why it was “tempting to treat the entire memory as one organ.” When Eckert questioned why von Neumann seemed to be preparing a paper based on the ideas that others had helped to develop, Goldstine reassured him: “He’s just trying to get these things clear in his own mind and he’s done it by writing me letters so that we can write back if he hasn’t understood it properly.”59

Von Neumann had left blank spaces for inserting references to other people’s work, and his text never actually used the acronym EDVAC. But when Goldstine had the paper typed up (it ran to 101 pages), he ascribed sole authorship to his hero. The title page Goldstine composed called it “First Draft of a Report on the EDVAC, by John von Neumann.” Goldstine used a mimeograph machine to produce twenty-four copies, which he distributed at the end of June 1945.60

The “Draft Report” was an immensely useful document, and it guided the development of subsequent computers for at least a decade. Von Neumann’s decision to write it and allow Goldstine to distribute it reflected the openness of academic-oriented scientists, especially mathematicians, who tend to want to publish and disseminate rather than attempt to own intellectual property. “I certainly intend to do my part to keep as much of this field in the public domain (from the patent point of view) as I can,” von Neumann explained to a colleague. He had two purposes in writing the report, he later said: “to contribute to clarifying and coordinating the thinking of the group working on the EDVAC” and “to further the development of the art of building high speed computers.” He said that he was not trying to assert any ownership of the concepts, and he never applied for a patent on them.61

Eckert and Mauchly saw this differently. “You know, we finally regarded von Neumann as a huckster of other people’s ideas with Goldstine as his principal mission salesman,” Eckert later said. “Von Neumann was stealing ideas and trying to pretend work done at [Penn’s] Moore School was work he had done.”62 Jean Jennings agreed, later lamenting that Goldstine “enthusiastically supported von Neumann’s wrongful claims and essentially helped the man hijack the work of Eckert, Mauchly, and the others in the Moore School group.”63

What especially upset Mauchly and Eckert, who tried to patent many of the concepts behind both ENIAC and then EDVAC, was that the distribution of von Neumann’s report legally placed those concepts in the public domain. When Mauchly and Eckert tried to patent the architecture of a stored-program computer, they were stymied because (as both the Army’s lawyers and the courts eventually ruled) von Neumann’s report was deemed to be a “prior publication” of those ideas.

These patent disputes were the forerunner of a major issue of the digital era: Should intellectual property be shared freely and placed whenever possible into the public domain and open-source commons? That course, largely followed by the developers of the Internet and the Web, can spur innovation through the rapid dissemination and crowdsourced improvement of ideas. Or should intellectual property rights be protected and inventors allowed to profit from their proprietary ideas and innovations? That path, largely followed in the computer hardware, electronics, and semiconductor industries, can provide the financial incentives and capital investment that encourage innovation and reward risk. In the seventy years since von Neumann effectively placed his “Draft Report” on the EDVAC into the public domain, the trend for computers has been, with a few notable exceptions, toward a more proprietary approach. In 2011 a milestone was reached: Apple and Google spent more on lawsuits and payments involving patents than they did on research and development of new products.64

THE PUBLIC UNVEILING OF ENIAC

Even as the team at Penn was designing EDVAC, they were still scrambling to get its predecessor, ENIAC, up and running. That occurred in the fall of 1945.

By then the war was over. There was no need to compute artillery trajectories, but ENIAC’s first task nevertheless involved weaponry. The secret assignment came from Los Alamos, the atomic weapons lab in New Mexico, where the Hungarian-born theoretical physicist Edward Teller had devised a proposal for a hydrogen bomb, dubbed “the Super,” in which a fission atomic device would be used to create a fusion reaction. To determine how this would work, the scientists needed to calculate what the force of the reactions would be at every ten-millionth of a second.

The nature of the problem was highly classified, but the mammoth equations were brought to Penn in October for ENIAC to crunch. It required almost a million punch cards to input the data, and Jennings was summoned to the ENIAC room with some of her colleagues so that Goldstine could direct the process of setting it up. ENIAC solved the equations, and in doing so showed that Teller’s design was flawed. The mathematician and Polish refugee Stanislaw Ulam subsequently worked with Teller (and Klaus Fuchs, who turned out to be a Russian spy) to modify the hydrogen bomb concept, based on the ENIAC results, so that it could produce a massive thermonuclear reaction.65

Until such classified tasks were completed, ENIAC was kept under wraps. It was not shown to the public until February 15, 1946, when the Army and Penn scheduled a gala presentation with some press previews leading up to it.66 Captain Goldstine decided that the centerpiece of the unveiling would be a demonstration of a missile trajectory calculation. So two weeks in advance, he invited Jean Jennings and Betty Snyder to his apartment and, as Adele served tea, asked them if they could program ENIAC to do this in time. “We sure could,” Jennings pledged. She was excited. It would allow them to get their hands directly on the machine, which was rare.67 They set to work plugging memory buses into the correct units and setting up program trays.

The men knew that the success of their demonstration was in the hands of these two women. Mauchly came by one Saturday with a bottle of apricot brandy to keep them fortified. “It was delicious,” Jennings recalled. “From that day forward, I always kept a bottle of apricot brandy in my cupboard.” A few days later, the dean of the engineering school brought them a paper bag containing a fifth of whiskey. “Keep up the good work,” he told them. Snyder and Jennings were not big drinkers, but the gifts served their purpose. “It impressed us with the importance of this demonstration,” said Jennings.68

The night before the demonstration was Valentine’s Day, but despite their normally active social lives, Snyder and Jennings did not celebrate. “Instead, we were holed up with that wonderful machine, the ENIAC, busily making the last corrections and checks on the program,” Jennings recounted. There was one stubborn glitch they couldn’t figure out: the program did a wonderful job spewing out data on the trajectory of artillery shells, but it just didn’t know when to stop. Even after the shell would have hit the ground, the program kept calculating its trajectory, “like a hypothetical shell burrowing through the ground at the same rate it had traveled through the air,” as Jennings described it. “Unless we solved that problem, we knew the demonstration would be a dud, and the ENIAC’s inventors and engineers would be embarrassed.”69

Jennings and Snyder worked late into the evening before the press briefing trying to fix it, but they couldn’t. They finally gave up at midnight, when Snyder needed to catch the last train to her suburban apartment. But after she went to bed, Snyder figured it out: “I woke up in the middle of the night thinking what that error was. . . . I came in, made a special trip on the early train that morning to look at a certain wire.” The problem was that there was a setting at the end of a “do loop” that was one digit off. She flipped the requisite switch and the glitch was fixed. “Betty could do more logical reasoning while she was asleep than most people can do awake,” Jennings later marveled. “While she slept, her subconscious untangled the knot that her conscious mind had been unable to.”70

At the demonstration, ENIAC was able to spew out in fifteen seconds a set of missile trajectory calculations that would have taken human computers, even working with a Differential Analyzer, several weeks. It was all very dramatic. Mauchly and Eckert, like good innovators, knew how to put on a show. The tips of the vacuum tubes in the ENIAC accumulators, which were arranged in 10 x 10 grids, poked through holes in the machine’s front panel. But the faint light from the neon bulbs, which served as indicator lights, was barely visible. So Eckert got Ping-Pong balls, cut them in half, wrote numbers on them, and placed them over the bulbs. As the computer began processing the data, the lights in the room were turned off so that the audience would be awed by the blinking Ping-Pong balls, a spectacle that became a staple of movies and TV shows. “As the trajectory was being calculated, numbers built up in the accumulators and were transferred from place to place, and the lights started flashing like the bulbs on the marquees in Las Vegas,” said Jennings. “We had done what we set out to do. We had programmed the ENIAC.”71 That bears repeating: they had programmed the ENIAC.

The unveiling of ENIAC made the front page of the New York Times under the headline “Electronic Computer Flashes Answers, May Speed Engineering.” The story began, “One of the war’s top secrets, an amazing machine which applies electronic speeds for the first time to mathematical tasks hitherto too difficult and cumbersome for solution, was announced here tonight by the War Department.”72 The report continued inside the Times for a full page, with pictures of Mauchly, Eckert, and the room-size ENIAC. Mauchly proclaimed that the machine would lead to better weather predictions (his original passion), airplane design, and “projectiles operating at supersonic speeds.” The Associated Press story reported an even grander vision, declaring, “The robot opened the mathematical way to better living for every man.”73 As an example of “better living,” Mauchly asserted that computers might one day serve to lower the cost of a loaf of bread. How that would happen he did not explain, but it and millions of other such ramifications did in fact eventually transpire.

Later Jennings complained, in the tradition of Ada Lovelace, that many of the newspaper reports overstated what ENIAC could do by calling it a “giant brain” and implying that it could think. “The ENIAC wasn’t a brain in any sense,” she insisted. “It couldn’t reason, as computers still cannot reason, but it could give people more data to use in reasoning.”

Jennings had another complaint that was more personal: “Betty and I were ignored and forgotten following the demonstration. We felt as if we had been playing parts in a fascinating movie that suddenly took a bad turn, in which we had worked like dogs for two weeks to produce something really spectacular and then were written out of the script.” That night there was a candle-lit dinner at Penn’s venerable Houston Hall. It was filled with scientific luminaries, military brass, and most of the men who had worked on ENIAC. But Jean Jennings and Betty Snyder were not there, nor were any of the other women programmers.74 “Betty and I weren’t invited,” Jennings said, “so we were sort of horrified.”75 While the men and various dignitaries celebrated, Jennings and Snyder made their way home alone through a very cold February night.

THE FIRST STORED-PROGRAM COMPUTERS

The desire of Mauchly and Eckert to patent—and profit from—what they had helped to invent caused problems at Penn, which did not yet have a clear policy for divvying up intellectual property rights. They were allowed to apply for patents on ENIAC, but the university then insisted on getting royalty-free licenses as well as the right to sublicense all aspects of the design. Furthermore, the parties couldn’t agree on who would have rights to the innovations on EDVAC. The wrangling was complex, but the upshot was that Mauchly and Eckert left Penn at the end of March 1946.76

They formed what became the Eckert-Mauchly Computer Corporation, based in Philadelphia, and were pioneers in turning computing from an academic to a commercial endeavor. (In 1950 their company, along with the patents they would be granted, became part of Remington Rand, which morphed into Sperry Rand and then Unisys.) Among the machines they built was UNIVAC, which was purchased by the Census Bureau and other clients, including General Electric.

With its flashing lights and Hollywood aura, UNIVAC became famous when CBS featured it on election night in 1952. Walter Cronkite, the young anchor of the network’s coverage, was dubious that the huge machine would be much use compared to the expertise of the network’s correspondents, but he agreed that it might provide an amusing spectacle for viewers. Mauchly and Eckert enlisted a Penn statistician, and they worked out a program that compared the early results from some sample precincts to the outcomes in previous elections. By 8:30 p.m. on the East Coast, well before most of the nation’s polls had closed, UNIVAC predicted, with 100-to-1 certainty, an easy win for Dwight Eisenhower over Adlai Stevenson. CBS initially withheld UNIVAC’s verdict; Cronkite told his audience that the computer had not yet reached a conclusion. Later that night, though, after the vote counting confirmed that Eisenhower had won handily, Cronkite put the correspondent Charles Collingwood on the air to admit that UNIVAC had made the prediction at the beginning of the evening but CBS had not aired it. UNIVAC became a celebrity and a fixture on future election nights.77

Eckert and Mauchly did not forget the importance of the women programmers who had worked with them at Penn, even though they had not been invited to the dedication dinner for ENIAC. They hired Betty Snyder, who, under her married name, Betty Holberton, went on to become a pioneer programmer who helped develop the COBOL and Fortran languages, and Jean Jennings, who married an engineer and became Jean Jennings Bartik. Mauchly also wanted to recruit Kay McNulty, but after his wife died in a drowning accident he proposed marriage to her instead. They had five children, and she continued to help on software design for UNIVAC.

Mauchly also hired the dean of them all, Grace Hopper. “He let people try things,” Hopper replied when asked why she let him talk her into joining the Eckert-Mauchly Computer Corporation. “He encouraged innovation.”78 By 1952 she had created the world’s first workable compiler, known as the A-0 system, which translated symbolic mathematical code into machine language and thus made it easier for ordinary folks to write programs.

Like a salty crew member, Hopper valued an all-hands-on-deck style of collaboration, and she helped develop the open-source method of innovation by sending out her initial versions of the compiler to her friends and acquaintances in the programming world and asking them to make improvements. She used the same open development process when she served as the technical lead in coordinating the creation of COBOL, the first cross-platform standardized business language for computers.79 Her instinct that programming should be machine-independent was a reflection of her preference for collegiality; even machines, she felt, should work well together. It also showed her early understanding of a defining fact of the computer age: that hardware would become commoditized and that programming would be where the true value resided. Until Bill Gates came along, it was an insight that eluded most of the men.IV

Von Neumann was disdainful of the Eckert-Mauchly mercenary approach. “Eckert and Mauchly are a commercial group with a commercial patent policy,” he complained to a friend. “We cannot work with them directly or indirectly in the same open manner in which we would work with an academic group.”80 But for all of his righteousness, von Neumann was not above making money off his ideas. In 1945 he negotiated a personal consulting contract with IBM, giving the company rights to any inventions he made. It was a perfectly valid arrangement. Nevertheless, it outraged Eckert and Mauchly. “He sold all our ideas through the back door to IBM,” Eckert complained. “He spoke with a forked tongue. He said one thing and did something else. He was not to be trusted.”81

After Mauchly and Eckert left, Penn rapidly lost its role as a center of innovation. Von Neumann also left, to return to the Institute for Advanced Study in Princeton. He took with him Herman and Adele Goldstine, along with key engineers such as Arthur Burks. “Perhaps institutions as well as people can become fatigued,” Herman Goldstine later reflected on the demise of Penn as the epicenter of computer development.82 Computers were considered a tool, not a subject for scholarly study. Few of the faculty realized that computer science would grow into an academic discipline even more important than electrical engineering.

Despite the exodus, Penn was able to play one more critical role in the development of computers. In July 1946 most of the experts in the field—including von Neumann, Goldstine, Eckert, Mauchly, and others who had been feuding—returned for a series of talks and seminars, called the Moore School Lectures, that would disseminate their knowledge about computing. The eight-week series attracted Howard Aiken, George Stibitz, Douglas Hartree of Manchester University, and Maurice Wilkes of Cambridge. A primary focus was the importance of using stored-program architecture if computers were to fulfill Turing’s vision of being universal machines. As a result, the design ideas developed collaboratively by Mauchly, Eckert, von Neumann, and others at Penn became the foundation for most future computers.

The distinction of being the first stored-program computers went to two machines that were completed, almost simultaneously, in the spring and early summer of 1948. One of them was an update of the original ENIAC. Von Neumann and Goldstine, along with the engineers Nick Metropolis and Richard Clippinger, worked out a way to use three of ENIAC’s function tables to store a rudimentary set of instructions.83 Those function tables had been used to store data about the drag on an artillery shell, but that memory space could be used for other purposes since the machine was no longer being used to calculate trajectory tables. Once again, the actual programming work was done largely by the women: Adele Goldstine, Klára von Neumann, and Jean Jennings Bartik. “I worked again with Adele when we developed, along with others, the original version of the code required to turn ENIAC into a stored-program computer using the function tables to store the coded instructions,” Bartik recalled.84

This reconfigured ENIAC, which became operational in April 1948, had a read-only memory, which meant that it was hard to modify programs while they were running. In addition, the mercury delay line memories planned for other machines, such as EDVAC, were sluggish and required precision engineering. Both of these drawbacks were avoided in a small machine at Manchester University in England that was built from scratch to function as a stored-program computer. Dubbed “the Manchester Baby,” it became operational in June 1948.

Manchester’s computing lab was run by Max Newman, Turing’s mentor, and the primary work on the new computer was done by Frederic Calland Williams and Thomas Kilburn. Williams invented a storage mechanism using cathode-ray tubes, which made the machine faster and simpler than ones using mercury delay lines. It worked so well that it led to the more powerful Manchester Mark I, which became operational in April 1949. The following month, Maurice Wilkes and a team at Cambridge completed the EDSAC.85

As these machines were being developed, Turing was also trying to develop a stored-program computer. After leaving Bletchley Park, he joined the National Physical Laboratory, a prestigious institute in London, where he designed a computer named the Automatic Computing Engine in homage to Babbage’s two engines. But progress on ACE was fitful. By 1948 Turing was fed up with the pace and frustrated that his colleagues had no interest in pushing the bounds of machine learning and artificial intelligence, so he left to join Max Newman at Manchester.86

Likewise, von Neumann embarked on developing a stored-program computer as soon as he settled at the Institute for Advanced Study in Princeton in 1946, an endeavor chronicled in George Dyson’s Turing’s Cathedral. The Institute’s director, Frank Aydelotte, and its most influential faculty trustee, Oswald Veblen, were staunch supporters of what became known as the IAS Machine, fending off criticism from other faculty that building a computing machine would demean the mission of what was supposed to be a haven for theoretical thinking. “He clearly stunned, or even horrified, some of his mathematical colleagues of the most erudite abstraction, by openly professing his great interest in other mathematical tools than the blackboard and chalk or pencil and paper,” von Neumann’s wife, Klára, recalled. “His proposal to build an electronic computing machine under the sacred dome of the Institute was not received with applause to say the least.”87

Von Neumann’s team members were stashed in an area that would have been used by the logician Kurt Gödel’s secretary, except he didn’t want one. Throughout 1946 they published detailed papers about their design, which they sent to the Library of Congress and the U.S. Patent Office, not with applications for patents but with affidavits saying they wanted the work to be in the public domain.

Their machine became fully operational in 1952, but it was slowly abandoned after von Neumann left for Washington to join the Atomic Energy Commission. “The demise of our computer group was a disaster not only for Princeton but for science as a whole,” said the physicist Freeman Dyson, a member of the Institute (and George Dyson’s father). “It meant that there did not exist at that critical period in the 1950s an academic center where computer people of all kinds could get together at the highest intellectual level.”88 Instead, beginning in the 1950s, innovation in computing shifted to the corporate realm, led by companies such as Ferranti, IBM, Remington Rand, and Honeywell.

That shift takes us back to the issue of patent protections. If von Neumann and his team had continued to pioneer innovations and put them in the public domain, would such an open-source model of development have led to faster improvements in computers? Or did marketplace competition and the financial rewards for creating intellectual property do more to spur innovation? In the cases of the Internet, the Web, and some forms of software, the open model would turn out to work better. But when it came to hardware, such as computers and microchips, a proprietary system provided incentives for a spurt of innovation in the 1950s. The reason the proprietary approach worked well, especially for computers, was that large industrial organizations, which needed to raise working capital, were best at handling the research, development, manufacturing, and marketing for such machines. In addition, until the mid-1990s, patent protection was easier to obtain for hardware than it was for software.V However, there was a downside to the patent protection given to hardware innovation: the proprietary model produced companies that were so entrenched and defensive that they would miss out on the personal computer revolution in the 1970s.

CAN MACHINES THINK?

As he thought about the development of stored-program computers, Alan Turing turned his attention to the assertion that Ada Lovelace had made a century earlier, in her final “Note” on Babbage’s Analytical Engine: that machines could not really think. If a machine could modify its own program based on the information it processed, Turing asked, wouldn’t that be a form of learning? Might that lead to artificial intelligence?

The issues surrounding artificial intelligence go back to the ancients. So do the related questions involving human consciousness. As with most questions of this sort, Descartes was instrumental in framing them in modern terms. In his 1637 Discourse on the Method, which contains his famous assertion “I think, therefore I am,” Descartes wrote:

If there were machines that bore a resemblance to our bodies and imitated our actions as closely as possible for all practical purposes, we should still have two very certain means of recognizing that they were not real humans. The first is that . . . it is not conceivable that such a machine should produce arrangements of words so as to give an appropriately meaningful answer to whatever is said in its presence, as the dullest of men can do. Secondly, even though some machines might do some things as well as we do them, or perhaps even better, they would inevitably fail in others, which would reveal that they are acting not from understanding.

Turing had long been interested in the way computers might replicate the workings of a human brain, and this curiosity was furthered by his work on machines that deciphered coded language. In early 1943, as Colossus was being designed at Bletchley Park, Turing sailed across the Atlantic on a mission to Bell Laboratories in lower Manhattan, where he consulted with the group working on electronic speech encipherment, the technology that could electronically scramble and unscramble telephone conversations.

There he met the colorful genius Claude Shannon, the former MIT graduate student who wrote the seminal master’s thesis in 1937 that showed how Boolean algebra, which rendered logical propositions into equations, could be performed by electronic circuits. Shannon and Turing began meeting for tea and long conversations in the afternoons. Both were interested in brain science, and they realized that their 1937 papers had something fundamental in common: they showed how a machine, operating with simple binary instructions, could tackle not only math problems but all of logic. And since logic was the basis for how human brains reasoned, a machine could, in theory, replicate human intelligence.

“Shannon wants to feed not just data to [a machine], but cultural things!” Turing told Bell Labs colleagues at lunch one day. “He wants to play music to it!” At another lunch in the Bell Labs dining room, Turing held forth in his high-pitched voice, audible to all the executives in the room: “No, I’m not interested in developing a powerful brain. All I’m after is just a mediocre brain, something like the President of the American Telephone and Telegraph Company.”89

When Turing returned to Bletchley Park in April 1943, he became friends with a colleague named Donald Michie, and they spent many evenings playing chess in a nearby pub. As they discussed the possibility of creating a chess-playing computer, Turing did not approach the problem by thinking of ways to use brute processing power to calculate every possible move; instead he focused on the possibility that a machine might learn how to play chess by repeated practice. In other words, it might be able to try new gambits and refine its strategy with every new win or loss. This approach, if successful, would represent a fundamental leap that would have dazzled Ada Lovelace: machines would be able to do more than merely follow the specific instructions given them by humans; they could learn from experience and refine their own instructions.

“It has been said that computing machines can only carry out the purposes that they are instructed to do,” he explained in a talk to the London Mathematical Society in February 1947. “But is it necessary that they should always be used in such a manner?” He then discussed the implications of the new stored-program computers that could modify their own instruction tables. “It would be like a pupil who had learnt much from his master, but had added much more by his own work. When this happens I feel that one is obliged to regard the machine as showing intelligence.”90