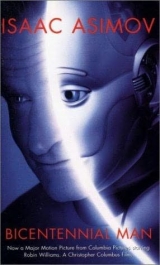

Text of the book "The Bicentennial Man and Other Stories"

Author: Isaac Asimov

Genre: Science fiction

Annette said, "I'm not babbling. You know it took more than rocket ships to colonize the Moon. To make a successful colony possible, men had to be altered genetically and adjusted to low gravity. You are a product of such genetic engineering."

"Well?"

"And might not genetic engineering also help men to greater gravitational pull? What is the largest planet of the Solar System, Mr. Demerest?"

"Jupi-"

"Yes, Jupiter. Eleven times the diameter of the Earth; forty times the diameter of the Moon. A surface a hundred and twenty times that of the Earth in area; sixteen hundred times that of the Moon. Conditions so different from anything we can encounter anywhere on the worlds the size of Earth or less that any scientist of any persuasion would give half his life for a chance to observe at close range."

"But Jupiter is an impossible target."

"Indeed?" said Annette, and even managed a faint smile. "As impossible as flying? Why is it impossible? Genetic engineering could design men with stronger and denser bones, stronger and more compact muscles. The same principles that enclose Luna City against the vacuum and Ocean-Deep against the sea can also enclose the future Jupiter-Deep against its ammoniated surroundings."

"The gravitational field-"

"Can be negotiated by nuclear-powered ships that are now on the drawing board. You don't know that but I do."

"We're not even sure about the depth of the atmosphere. The pressures-"

"The pressures! The pressures! Mr. Demerest, look about you. Why do you suppose Ocean-Deep was really built? To exploit the ocean? The settlements on the continental shelf are doing that quite adequately. To gain knowledge of the deep-sea bottom? We could do that by 'scaphe easily and we could then have spared the hundred billion dollars invested in Ocean-Deep so far.

"Don't you see, Mr. Demerest, that Ocean-Deep must mean something more than that? The purpose' of Ocean-Deep is to devise the ultimate vessels and mechanisms that will suffice to explore and colonize Jupiter. Look about you and see the beginnings of a Jovian environment; the closest approach we can come to it on Earth. It is only a faint image of mighty Jupiter, but it's a beginning.

"Destroy this, Mr. Demerest, and you destroy any hope for Jupiter. On the other hand, let us live and we will, together, penetrate and settle the brightest jewel of the Solar System. And long before we can reach the limits of Jupiter, we will be ready for the stars, for the Earth-type planets circling them, and the Jupiter-type planets, too. Luna City won't be abandoned because both are necessary for this ultimate aim."

For the moment, Demerest had altogether forgotten about that last button. He said, "Nobody on Luna City has heard of this."

"You haven't. There are those on Luna City who know. If you had told them of your plan of destruction, they would have stopped you. Naturally, we can't make this common knowledge and only a few people anywhere can know. The public supports only with difficulty the planetary projects now in progress. If the PPC is parsimonious it is because public opinion limits its generosity. What do you suppose public opinion would say if they thought we were aiming toward Jupiter? What a super-boondoggle that would be in their eyes. But we continue and what money we can save and make use of we place in the various facets of Project Big World."

"Project Big World?"

"Yes," said Annette. "You know now and I have committed a serious security breach. But it doesn't matter, does it? Since we're all dead and since the project is, too."

"Wait. now, Mrs. Bergeh."

"If you change your mind now, don't think you can ever talk about Project Big World. That would end the project just as effectively as destruction here would. And it would end both your career and mine. It might end Luna City and Ocean-Deep, too-so now that you know, maybe it makes no difference anyway. You might just as well push that button."

"I said wait-" Demerest's brow was furrowed and his eyes burned with anguish. "I don't know-"

Bergen gathered for the sudden jump as Demerest's tense alertness wavered into uncertain introspection, but Annette grasped her husband's sleeve.

A timeless interval that might have been ten seconds long followed and then Demerest held out his laser. "Take it," he said. "I'll consider myself under arrest."

"You can't be arrested," said Annette, "without the whole story coming out." She took the laser and gave it to Bergen. "It will be enough that you return to Luna City and keep silent. Till then we will keep you.under guard."

Bergen was at the manual controls. The inner door slid shut and after that there was the thunderous waterclap of the water returning into the lock.

Husband and wife were alone again. They had not dared say a word until Demerest was safely put to sleep under the watchful eyes of two men detailed for the purpose. The unexpected waterclap had roused everybody and a sharply bowdlerized account of the incident had been given out.

The manual controls were now locked off and Bergen said, "From this point on, the manuals will have to be adjusted to fail-safe. And visitors will have to be searched."

"Oh, John," said Annette. "I think people are insane. There we were, facing death for us and for Ocean-Deep; just the end of everything. And I kept thinking-1 must keep calm; I mustn't have a miscarriage."

"You kept calm all right. You were magnificent. I mean, Project Big World! I never conceived of such a thing, but by -by-Jove, it's an attractive thought. It's wonderful."

"I'm sorry I had to say all that, John. It was all a fake, of course. I made it up, Demerest wanted me to make something up really. He wasn't a killer or destroyer; he was, according to his own overheated lights, a patriot, and I suppose he was telling himself he must destroy in order to save -a common enough view among the small-minded. But he said he would give us time to talk him out of it and I think he was praying we would manage to do so. He wanted us to think of something that would give him the excuse to save in order to save, and I gave it to him. I'm sorry I had to fool you, John."

"You didn't fool me."

"I didn't?"

"How could you? I knew you weren't a member of PPC."

"What made you so sure of that? Because I'm a woman?"

"Not at all. Because I'm a member, Annette, and that's confidential. And, if you don't mind, I will begin a move to initiate exactly what you suggested-Project Big World."

"Well!" Annette considered that and, slowly, smiled. "Well! That's not bad. Women do have their uses."

"Something," said Bergen, smiling also, "I have never denied."

***

Ed Ferman of F & SF and Barry Malzberg, one of the brightest of the new generation of science fiction writers, had it in mind in early 1973 to prepare an anthology in which a number of different science fiction themes were carried to their ultimate conclusion. For each story they tapped some writer who was associated with a particular theme, and for a story on the subject of robotics, they wanted me, naturally.

I tried to beg off with my usual excuses concerning the state of my schedule, but they said if I didn't do it there would be no story on robotics at all, because they wouldn't ask anyone else. That shamed me into agreeing to do it.

I then had to think up a way of reaching an ultimate conclusion. There had always been one aspect of the robot theme I had never had the courage to write, although the late John Campbell and I had sometimes discussed it.

In the first two Laws of Robotics, you see, the expression "human being" is used, and the assumption is that a robot can recognize a human being when he sees one. But what is a human being? Or, as the Psalmist asks of God, "What is man that thou art mindful of him?"

Surely, if there's any doubt as to the definition of man, the Laws of Robotics don't necessarily hold. So I wrote THAT THOU ART MINDFUL OF HIM, and Ed and Barry were very happy with it-and so was I. It not only appeared in the anthology, which was entitled Final Stage, but was also published in the May 1974 issue of F & SF.

That Thou Art Mindful of Him

The Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

1.

Keith Harriman, who had for twelve years now been Director of Research at United States Robots and Mechanical Men Corporation, found that he was not at all certain whether he was doing right. The tip of his tongue passed over his plump but rather pale lips and it seemed to him that the holographic image of the great Susan Calvin, which stared unsmilingly down upon him, had never looked so grim before.

Usually he blanked out that image of the greatest roboticist in history because she unnerved him. (He tried thinking of the image as "it" but never quite succeeded.) This time he didn't quite dare to and her long-dead gaze bored into the side of his face.

It was a dreadful and demeaning step he would have to take. Opposite him was George Ten, calm and unaffected either by Harriman's patent uneasiness or by the image of the patron saint of robotics glowing in its niche above.

Harriman said, "We haven't had a chance to talk this out, really, George. You haven't been with us that long and I haven't had a good chance to be alone with you. But now I would like to discuss the matter in some detail."

"I am perfectly willing to do that, " said George. "In my stay at U. S. Robots, I have gathered the crisis has something to do with the Three Laws."

"Yes. You know the Three Laws, of course."

"I do."

"Yes, I'm sure you do. But let us dig even deeper and consider the truly basic problem. In two centuries of, if I may say so, considerable success, U. S. Robots has never managed to persuade human beings to accept robots. We have placed robots only where work is required that human beings cannot do, or in environments that human beings find unacceptably dangerous. Robots have worked mainly in space and that has limited what we have been able to do."

"Surely," said George Ten, "that represents a broad limit, and one within which U. S. Robots can prosper."

"No, for two reasons. In the first place, the boundaries set for us inevitably contract. As the Moon colony, for instance, grows more sophisticated, its demand for robots decreases and we expect that, within the next few years, robots will be banned on the Moon. This will be repeated on every world colonized by mankind. Secondly, true prosperity is impossible without robots on Earth. We at U. S. Robots firmly believe that human beings need robots and must learn to live with their mechanical analogues if progress is to be maintained."

"Do they not? Mr. Harriman, you have on your desk a computer input which, I understand, is connected with the organization's Multivac. A computer is a kind of sessile robot; a robot brain not attached to a body-"

"True, but that also is limited. The computers used by mankind have been steadily specialized in order to avoid too humanlike an intelligence. A century ago we were well on the way to artificial intelligence of the most unlimited type through the use of great computers we called Machines. Those Machines limited their action of their own accord. Once they had solved the ecological problems that had threatened human society, they phased themselves out. Their own continued existence would, they reasoned, have placed them in the role of a crutch to mankind and, since they felt this would harm human beings, they condemned themselves by the First Law."

"And were they not correct to do so?"

"In my opinion, no. By their action, they reinforced mankind's Frankenstein complex; its gut fears that any artificial man they created would turn upon its creator. Men fear that robots may replace human beings."

"Do you not fear that yourself?"

"I know better. As long as the Three Laws of Robotics exist, they cannot. They can serve as partners of mankind; they can share in the great struggle to understand and wisely direct the laws of nature so that together they can do more than mankind can possibly do alone; but always in such a way that robots serve human beings."

"But if the Three Laws have shown themselves, over the course of two centuries, to keep robots within bounds, what is the source of the distrust of human beings for robots?"

"Well"-and Harriman's graying hair tufted as he scratched his head vigorously-"mostly superstition, of course. Unfortunately, there are also some complexities involved that anti-robot agitators seize upon."

"Involving the Three Laws?"

"Yes. The Second Law in particular. There's no problem in the Third Law, you see. It is universal. Robots must always sacrifice themselves for human beings, any human beings."

"Of course," said George Ten.

"The First Law is perhaps less satisfactory, since it is always possible to imagine a condition in which a robot must perform either Action A or Action B, the two being mutually exclusive, and where either action results in harm to human beings. The robot must therefore quickly select which action results in the least harm. To work out the positronic paths of the robot brain in such a way as to make that selection possible is not easy. If Action A results in harm to a talented young artist and B results in equivalent harm to five elderly people of no particular worth, which action should be chosen."

"Action A, " said George Ten. "Harm to one is less than harm to five."

"Yes, so robots have always been designed to decide. To expect robots to make judgments of fine points such as talent, intelligence, the general usefulness to society, has always seemed impractical. That would delay decision to the point where the robot is effectively immobilized. So we go by numbers. Fortunately, we might expect crises in which robots must make such decisions to be few…But then that brings us to the Second Law."

"The Law of Obedience."

"Yes. The necessity of obedience is constant. A robot may exist for twenty years without every having to act quickly to prevent harm to a human being, or find itself faced with the necessity of risking its own destruction. In all that time, however, it will be constantly obeying orders…Whose orders?"

"Those of a human being."

"Any human being? How do you judge a human being so as to know whether to obey or not? What is man, that thou art mindful of him, George?"

George hesitated at that.

Harriman said hurriedly, "A Biblical quotation. That doesn't matter. I mean, must a robot follow the orders of a child; or of an idiot; or of a criminal; or of a perfectly decent intelligent man who happens to be inexpert and therefore ignorant of the undesirable consequences of his order? And if two human beings give a robot conflicting orders, which does the robot follow?"

"In two hundred years," said George Ten, "have not these problems arisen and been solved?"

"No," said Harriman, shaking his head violently. "We have been hampered by the very fact that our robots have been used only in specialized environments out in space, where the men who dealt with them were experts in their field. There were no children, no idiots, no criminals, no well-meaning ignoramuses present. Even so, there were occasions when damage was done by foolish or merely unthinking orders. Such damage in specialized and limited environments could be contained. On Earth, however, robots must have judgment. So those against robots maintain, and, damn it, they are right."

"Then you must insert the capacity for judgment into the positronic brain."

"Exactly. We have begun to reproduce JG models in which the robot can weigh every human being with regard to sex, age, social and professional position, intelligence, maturity, social responsibility and so on."

"How would that affect the Three Laws?"

"The Third Law not at all. Even the most valuable robot must destroy himself for the sake of the most useless human being. That cannot be tampered with. The First Law is affected only where alternative actions will all do harm. The quality of the human beings involved as well as the quantity must be considered, provided there is time for such judgment and the basis for it, which will not be often. The Second Law will be most deeply modified, since every potential obedience must involve judgment. The robot will be slower to obey, except where the First Law is also involved, but it will obey more rationally."

"But the judgments which are required are very complicated."

"Very. The necessity of making such judgments slowed the reactions of our first couple of models to the point of paralysis. We improved matters in the later models at the cost of introducing so many pathways that the robot's brain became far too unwieldy. In our last couple of models, however, I think we have what we want. The robot doesn't have to make an instant judgment of the worth of a human being and the value of its orders. It begins by obeying all human beings as any ordinary robot would and then it learns. A robot grows, learns and matures. It is the equivalent of a child at first and must be under constant supervision. As it grows, however, it can, more and more, be allowed, unsupervised, into Earth's society. Finally, it is a full member of that society."

"Surely this answers the objections of those who oppose robots."

"No," said Harriman angrily. "Now they raise others. They will not accept judgments. A robot, they say, has no right to brand this person or that as inferior. By accepting the orders of A in preference to that of B, B is branded as of less consequence than A and his human rights are violated."

"What is the answer to that?"

"There is none. I am giving up."

"I see."

"As far as I myself am concerned…Instead, I turn to you, George."

"To me?" George Ten's voice remained level. There was a mild surprise in it but it did not affect him outwardly. "Why to me?"

"Because you are not a man," said Harriman tensely. "I told you I want robots to be the partners of human beings. I want you to be mine."

George Ten raised his hands and spread them, palms outward, in an oddly human gesture. "What can I do?"

"It seems to you, perhaps, that you can do nothing, George. You were created not long ago, and you are still a child. You were designed to be not overfull of original information-it was why I have had to explain the situation to you in such detail-in order to leave room for growth. But you will grow in mind and you will come to be able to approach the problem from a non-human standpoint. Where I see no solution, you, from your own other standpoint, may see one."

George Ten said, "My brain is man-designed. In what way can it be non-human?"

"You are the latest of the JG models, George. Your brain is the most complicated we have yet designed, in some ways more subtly complicated than that of the old giant Machines. It is open-ended and, starting on a human basis, may-no, will-grow in any direction. Remaining always within the insurmountable boundaries of the Three Laws, you may yet become thoroughly non-human in your thinking."

"Do I know enough about human beings to approach this problem rationally? About their history? Their psychology?"

"Of course not. But you will learn as rapidly as you can."

"Will I have help, Mr. Harriman?"

"No. This is entirely between ourselves. No one else knows of this and you must not mention this project to any human being, either at U. S. Robots or elsewhere."

George Ten said, "Are we doing wrong, Mr. Harriman, that you seek to keep the matter secret?"

"No. But a robot solution will not be accepted, precisely because it is robot in origin. Any suggested solution you have you will turn over to me; and if it seems valuable to me, I will present it. No one will ever know it came from you."

"In the light of what you have said earlier," said George Ten calmly, "this is the correct procedure…When do I start?"

"Right now. I will see to it that you have all the necessary films for scanning."